
[NeurIPS 2023] [arXiv] [Poster] [Code] [Data]

Sihan Xu¹*, Ziqiao Ma¹*, Yidong Huang¹, Honglak Lee¹˒², Joyce Chai¹

¹University of Michigan, ²LG AI Research

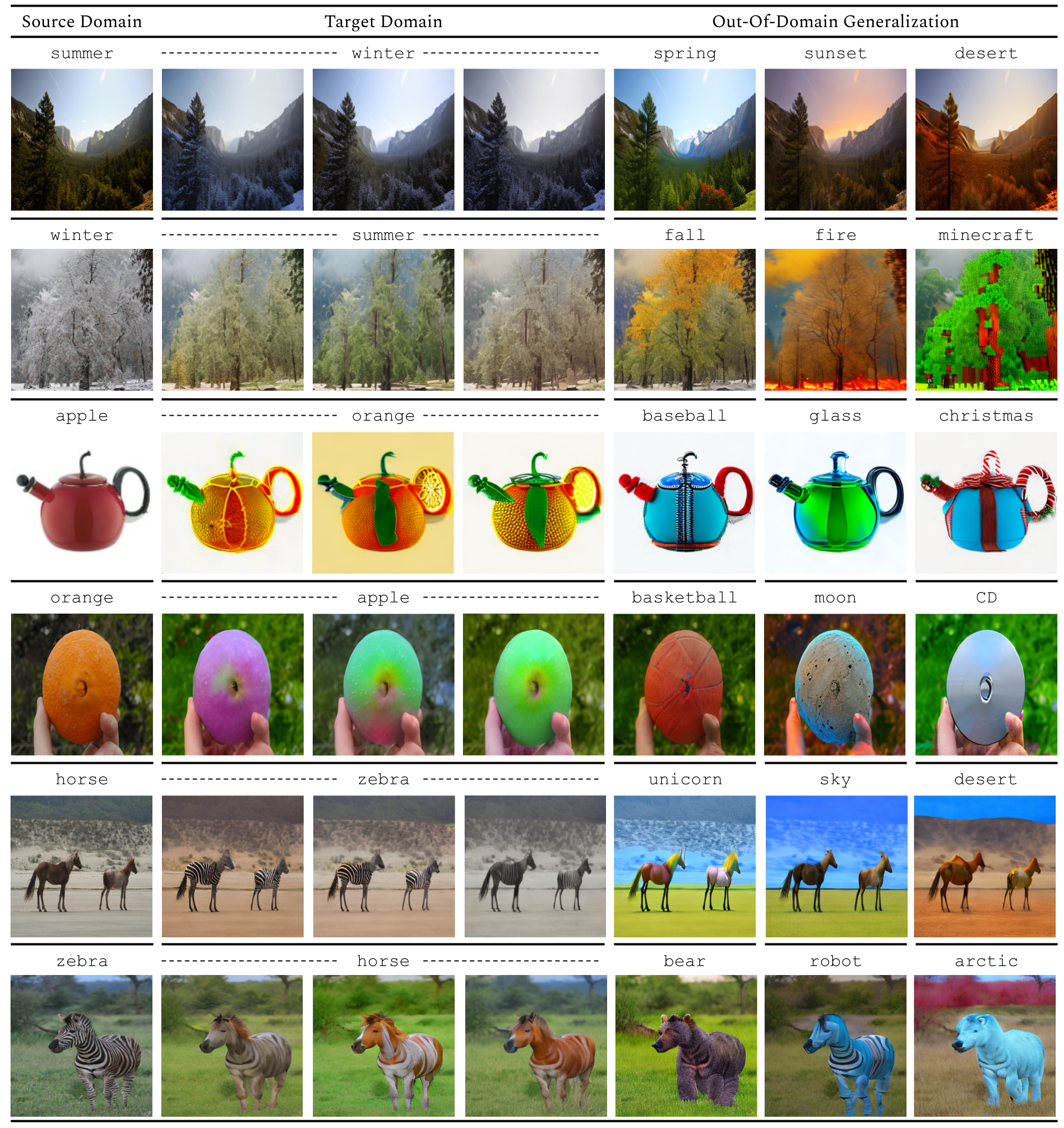

Diffusion models (DMs) have enabled breakthroughs in image synthesis but lack an intuitive interface for consistent image-to-image (I2I) translation. Various methods have been explored to address this issue, including mask-based methods, attention-based methods, and image conditioning. However, enabling unpaired I2I translation with pre-trained DMs while maintaining satisfactory consistency remains a critical challenge. This paper introduces CycleNet, a novel yet simple method that incorporates cycle consistency into DMs to regularize image manipulation. We validate CycleNet on unpaired I2I tasks of different granularities. Besides scene- and object-level translation, we contribute a multi-domain I2I translation dataset for studying physical state changes of objects. Our empirical studies show that CycleNet is superior in translation consistency and quality, and that it can generate high-quality images for out-of-domain distributions with a simple change of the textual prompt. CycleNet is a practical framework: it is robust even with very limited training data (around 2k) and requires minimal computational resources (1 GPU) to train.

The image translation cycle consists of a forward translation from \(x_0\) to \(\overline{y}_0\) and a backward translation from \(\overline{y}_0\) to \(\overline{x}_0\). The key idea of our method is that, when conditioned on an image \(c_{\mathrm{img}}\) that falls into the target domain specified by \(c_{\mathrm{text}}\), the latent diffusion model (LDM) should reproduce this image condition through the reverse process. Dashed lines indicate the regularization terms in the loss functions.
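To make the two regularizers in the figure concrete, here is a toy sketch in plain Python. It is not CycleNet's loss, which is applied inside the LDM's reverse process; it only assumes that a "translator" is some function mapping an image to an image. The names `cycle_regularizers`, `shift_fwd`, `shift_bwd`, and `clamp_fwd`, and the list-of-floats "images", are hypothetical stand-ins chosen for illustration. The cycle term compares \(\overline{x}_0\) with \(x_0\); the invariance term checks that an image already in the target domain passes through the forward translation unchanged.

```python
from typing import Callable, List, Tuple

# Toy stand-in for an image or latent: a flat list of pixel values (hypothetical).
Image = List[float]
Translator = Callable[[Image], Image]


def mse(a: Image, b: Image) -> float:
    """Mean squared error between two equally sized toy images."""
    if len(a) != len(b):
        raise ValueError("images must have the same size")
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def cycle_regularizers(
    x0: Image, y_target: Image, forward: Translator, backward: Translator
) -> Tuple[float, float]:
    """Return (cycle, invariance) penalties for a hypothetical translator pair.

    cycle:      x0 -> y_bar0 (forward) -> x_bar0 (backward); penalize x_bar0 != x0.
    invariance: an input already in the target domain should come back unchanged.
    """
    y_bar0 = forward(x0)
    x_bar0 = backward(y_bar0)
    cycle = mse(x_bar0, x0)
    invariance = mse(forward(y_target), y_target)
    return cycle, invariance


# Translator A: a pure brightness shift. Perfectly invertible, but it also
# shifts images that are already in the target ("bright") domain.
shift_fwd: Translator = lambda img: [v + 0.5 for v in img]
shift_bwd: Translator = lambda img: [v - 0.5 for v in img]

# Translator B: lifts every pixel to at least 0.6. It leaves target-domain
# images untouched, but its forward pass discards information.
clamp_fwd: Translator = lambda img: [max(v, 0.6) for v in img]

x0 = [0.1, 0.2, 0.3]        # toy source-domain image
y_target = [0.6, 0.7, 0.8]  # toy image already in the target domain

cyc_a, inv_a = cycle_regularizers(x0, y_target, shift_fwd, shift_bwd)
cyc_b, inv_b = cycle_regularizers(x0, y_target, clamp_fwd, shift_bwd)
print(f"shift: cycle={cyc_a:.4f} invariance={inv_a:.4f}")
print(f"clamp: cycle={cyc_b:.4f} invariance={inv_b:.4f}")
```

In this toy setting, translator A has a (numerically) zero cycle penalty but an invariance penalty of 0.25, while translator B has zero invariance penalty but a nonzero cycle penalty. Each term catches a failure the other misses, which is the intuition for combining them.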

[The first row shows results of CycleNet, and the second row shows results of Fast CycleNet.]

[From left to right, we edit images of empty cups into cups of coffee, juice, milk, and water.]


We report an ablation study on the winter→summer task, measured with FID, CLIP score, and LPIPS. When both losses are removed (row 4), the model reduces to a fine-tuned LDM backbone, which achieves a high CLIP similarity score of 24.35. This confirms that the pre-trained LDM backbone can already produce qualitatively reasonable translations, while its LPIPS score of 0.61 indicates poor consistency with the original images (lower LPIPS means the output stays closer to the input). Introducing the consistency constraint (row 3) improves the LPIPS score significantly, but the CLIP score drops to 19.89, suggesting a trade-off between cycle consistency and translation quality. Introducing the invariance constraint (row 2) yields the best translation quality with satisfactory consistency. With both constraints (row 1), CycleNet achieves better consistency at a slight cost in translation quality.
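To help read the CLIP numbers above, here is a sketch of one common CLIP score convention: 100 times the cosine similarity between an image embedding and a text embedding, clipped at zero (the form documented, for example, for torchmetrics' `CLIPScore`). This page does not state which implementation was used, so treat the convention as an assumption. The embeddings below are toy vectors, not outputs of a real CLIP encoder, and the function name `clip_score` is hypothetical.

```python
import math
from typing import Sequence


def clip_score(image_emb: Sequence[float], text_emb: Sequence[float]) -> float:
    """CLIP-style score: 100 * cosine similarity, clipped at zero.

    The inputs here are toy vectors; a real evaluation would obtain them
    from a CLIP image encoder and text encoder.
    """
    if len(image_emb) != len(text_emb):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(image_emb, text_emb))
    norm = math.sqrt(sum(a * a for a in image_emb)) * math.sqrt(sum(b * b for b in text_emb))
    if norm == 0.0:
        raise ValueError("embeddings must be non-zero")
    return max(100.0 * dot / norm, 0.0)


aligned = clip_score([1.0, 0.0], [1.0, 1.0])     # cosine = 1/sqrt(2)
orthogonal = clip_score([1.0, 0.0], [0.0, 1.0])  # cosine = 0
opposite = clip_score([1.0, 0.0], [-1.0, 0.0])   # negative cosine, clipped to 0
print(round(aligned, 2), orthogonal, opposite)
```

Under this convention, a score of 24.35 corresponds to a cosine similarity of about 0.24 between image and prompt embeddings; higher is better for CLIP score, whereas lower is better for both LPIPS and FID.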


@inproceedings{xu2023cyclenet,
  title = "CycleNet: Rethinking Cycle Consistency in Text-Guided Diffusion for Image Manipulation",
  author = "Xu, Sihan and Ma, Ziqiao and Huang, Yidong and Lee, Honglak and Chai, Joyce",
  booktitle = "Advances in Neural Information Processing Systems (NeurIPS)",
  year = "2023",
}